NVIDIA has released an alpha version of the Chat with RTX application, which allows you to run an AI chatbot based on a generative large language model (LLM) locally on your PC.

Chat with RTX

Features:

- Generate summaries and relevant answers from YouTube videos and local text documents such as PDF files.

- Video transcript search: the chatbot can locate the relevant passages of a video in seconds.

- Quickly extract key information from PDF files, which can be useful, for example, when working with legal documents.

- Local processing: unlike cloud chatbots, Chat with RTX runs on your computer, so requests are not sent to a remote server and responses arrive quickly.
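
Transcript search can be pictured as a retrieval step: split the transcript into timestamped segments and return the ones most relevant to the question. The sketch below is a deliberately simplified, hypothetical illustration that ranks segments by shared keywords. It assumes the transcript is already available as (start_seconds, text) pairs, and it does not reproduce NVIDIA's actual pipeline, which the article does not describe.

```python
import re

# Hypothetical minimal stopword list so filler words do not count as matches
STOPWORDS = {"the", "a", "an", "of", "to", "we", "how", "does", "do", "is"}


def tokenize(text: str) -> set[str]:
    # Lowercase, split into alphanumeric words and drop stopwords
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def find_fragments(segments: list[tuple[int, str]], query: str, top_k: int = 3) -> list[tuple[int, str]]:
    """Rank (start_seconds, text) segments by how many query words they share."""
    query_words = tokenize(query)
    scored = []
    for start, text in segments:
        overlap = len(query_words & tokenize(text))
        if overlap > 0:
            scored.append((overlap, start, text))
    # Highest overlap first; earlier timestamps break ties
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(start, text) for _, start, text in scored[:top_k]]


def format_timestamp(seconds: int) -> str:
    minutes, secs = divmod(seconds, 60)
    return f"{minutes:02d}:{secs:02d}"


if __name__ == "__main__":
    transcript = [
        (0, "Welcome to the channel"),
        (42, "Today we install the graphics driver"),
        (95, "Next we check the video memory of the GPU"),
        (180, "Finally we run the chatbot locally"),
    ]
    for start, text in find_fragments(transcript, "how much video memory does the GPU have"):
        print(format_timestamp(start), text)  # prints: 01:35 Next we check the video memory of the GPU
```

A production system would more plausibly compare embeddings than count keywords, but the shape of the step is the same: score every segment against the question and return the best matches with their timestamps.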

System requirements:

- Operating system: Windows

- Graphics card: NVIDIA GeForce RTX 30 or 40 series with at least 8 GB of video memory

- Disk space: 40 GB

- RAM: 3 GB (during operation)
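
A back-of-the-envelope calculation shows why 8 GB of video memory can be enough. Model weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sketch assumes a 7-billion-parameter model (the size of Mistral 7B) and compares 16-, 8- and 4-bit weights. The article does not state which precision the app uses, and real memory use is higher because of activations, the attention cache and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Assumed figure for illustration: a 7-billion-parameter model
    for bits in (16, 8, 4):
        print(f"7B params at {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

Running it prints 14.0 GB, 7.0 GB and 3.5 GB. Only the 4-bit (quantized) variant leaves comfortable headroom on an 8 GB card.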

How it works:

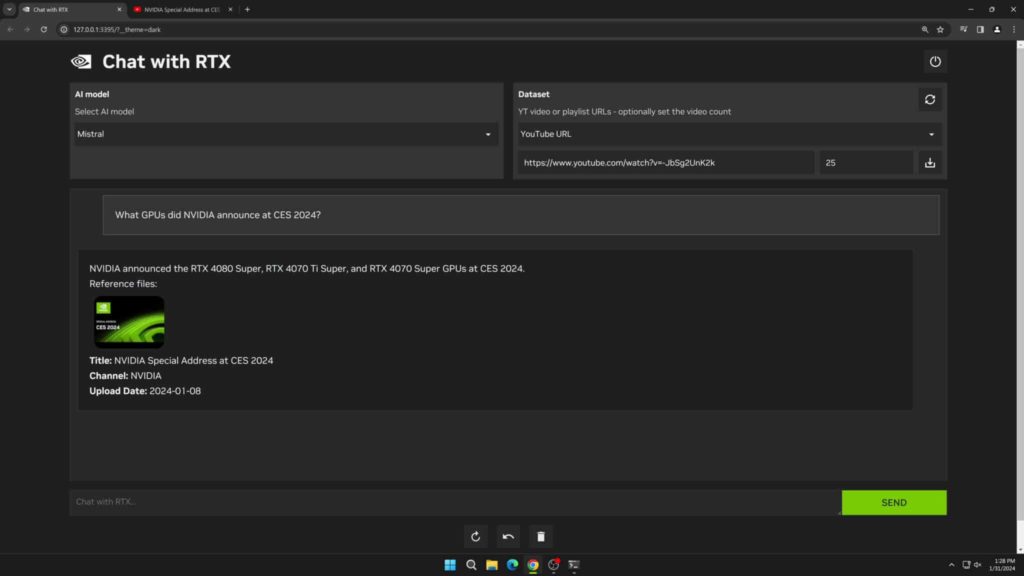

- Installing Chat with RTX on your PC sets up a local web server and a Python instance that runs the Mistral or Llama 2 LLM.

- The Tensor Cores of the NVIDIA RTX GPU accelerate request processing.

- The user accesses the chatbot through a web interface.
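
This architecture (a local web server in front of a Python process that runs the model) can be sketched with Python's standard library. Everything here is hypothetical: the `/chat` endpoint, the JSON format and `generate_reply` are placeholders rather than Chat with RTX's actual interface, and the model call is stubbed out.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate_reply(prompt: str) -> str:
    # Hypothetical stand-in for the local LLM (Mistral or Llama 2 on the GPU)
    return f"(model output for: {prompt})"


def handle_chat(body: bytes) -> bytes:
    """Parse a JSON request like {"prompt": "..."} and return a JSON reply."""
    request = json.loads(body)
    reply = generate_reply(request.get("prompt", ""))
    return json.dumps({"reply": reply}).encode("utf-8")


class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Only the hypothetical /chat endpoint is served
        if self.path != "/chat":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = handle_chat(self.rfile.read(length))
        except json.JSONDecodeError:
            self.send_error(400, "Request body must be JSON")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def run_server(port: int = 8000) -> None:
    # Listen on localhost only, so the chatbot is reachable from this PC alone
    HTTPServer(("127.0.0.1", port), ChatHandler).serve_forever()
```

To try it, call `run_server()` and POST a body such as `{"prompt": "Summarize this video"}` to `http://127.0.0.1:8000/chat`. Binding to `127.0.0.1` reflects the local-only design: nothing leaves the machine.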

Limitations:

- Early stage of development: the chatbot may be unstable and buggy.

- Does not remember context: each new request is processed independently of the previous ones.

- Not suitable for large amounts of data: attempting to index more than 25,000 documents may result in failure.
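
To make the lack of conversational memory concrete, the toy sketch below contrasts a stateless prompt builder, which sends only the current question, with one that replays earlier turns. The function names and the "User:/Assistant:" prompt format are hypothetical and are not taken from Chat with RTX.

```python
def build_prompt_stateless(question: str) -> str:
    # Each request is built from the current question only
    return f"User: {question}\nAssistant:"


def build_prompt_with_history(history: list[tuple[str, str]], question: str) -> str:
    # Earlier (question, answer) turns are replayed so the model can resolve references
    lines = []
    for past_q, past_a in history:
        lines.append(f"User: {past_q}")
        lines.append(f"Assistant: {past_a}")
    lines.append(f"User: {question}")
    lines.append("Assistant:")
    return "\n".join(lines)


if __name__ == "__main__":
    history = [("Which GPU do I need?", "An RTX 30 or 40 series card.")]
    follow_up = "How much memory should it have?"
    print(build_prompt_stateless(follow_up))
    print("---")
    print(build_prompt_with_history(history, follow_up))
```

In the stateless prompt, "it" has nothing to refer to, which is why follow-up questions fail when each request is processed independently.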

Outlook:

Chat with RTX is an interesting project that demonstrates the potential of local AI chatbots. It can be useful for people who prefer not to send their personal data to cloud services.

Important:

- The current version of Chat with RTX is intended for developers and enthusiasts.

- The application requires an NVIDIA GeForce RTX 30 or 40 series graphics card with at least 8 GB of video memory.